Azure Functions: Processing CSV file uploaded to Azure BLOB Storage

In this article, we will see how to process the CSV file uploaded to Azure Blob Storage using Azure Functions. The Azure Function is a serverless solution using which we can perform server-side tasks using less code with low infrastructure maintenance. This helps to save the cost and makes us free from worrying about deploying and maintaining servers for running our code on Azure.

Why Azure Functions?

The software applications often need to execute some tasks based on events e.g. when an HTTP request is received process data on some complex business rules when the file is uploaded to the Storage compress the file to save the space or process a large volume of the data when it is updated. In all these cases it is recommended that we must execute a background process to perform such complex operations based on triggering events. The Azure Function is exactly used for such background tasks.

Please note that to implement the code for this article, the Azure Subscription is required. You can visit this link to register for free.

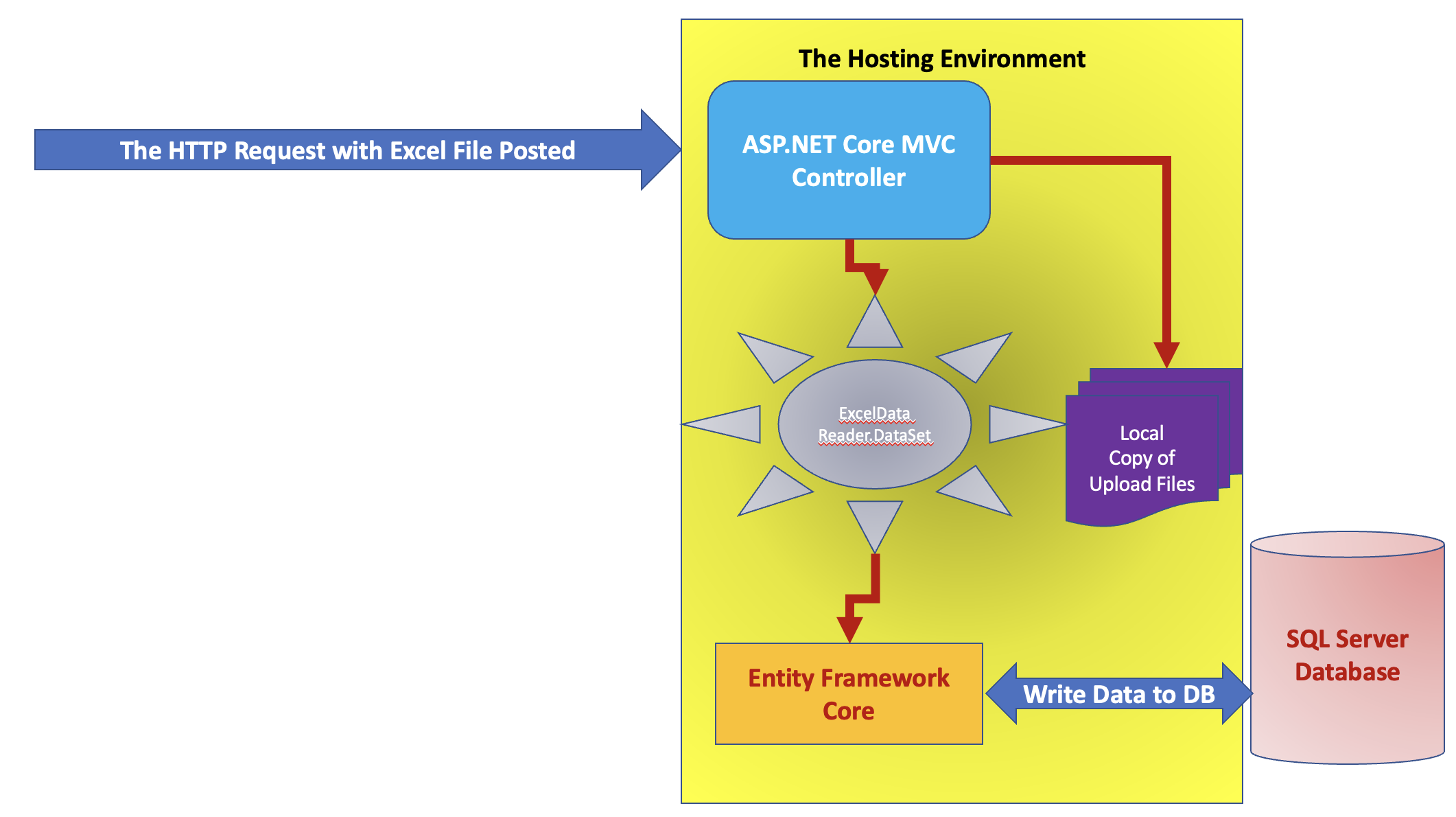

For this article, I have chosen a used case where a CSV file is uploaded to the Azure Blob storage using WEB API and once this file is uploaded, the Azure Functions is triggered to read the file and process it to store records in Azure SQL Database. Now here the question is can we not simply perform these tasks using WEB API, of-course we can but the problem will be if the CSV file is large then more time will be required to process this file and hence the performance will be impacted. So it is better to use API to upload the file to Azure Blob and then the Azure Function will be triggered to process the file from Azure Blob. This will free the WEB API from performing the complex task of processing the file and block the request execution till the processing is not completed. Figure 1 will explain an idea of the implementation.

Figure 1: Using API to Upload File to Azure BLOB Storage and Azure Function to Process the BLOB

If you are not aware of the creation of Azure Blob storage, then I have written an article on it which you can read from this link. Create a container in the Blob Storage and name it as csvcontainer. Also, copy the Connection String of the Storage so that we can use it in the Web API project to access the storage. I have already created the database with the name PersonDb on Azure SQL and the table is created using the script shown in listing 1

Create Table PersonInfo( BusinessEntityID int Primary Key, PersonType varchar(50) Not Null, NameStyle int not null, FirstName varchar(100) not null, LastName varchar(100) not null, EmailPromotion int Not null )

Listing 1: The SQL Database

Note that, after creating the Database and tables in Azure SQL, you need to set the networking by configuring an IP address of your machine so that you will be able to access the database and table. Figure 2 shows the network settings option for the database

Figure 2: Networking in Azure SQL

Click on the Show network settings, this will navigate to the Networking page where firewall rules can be set as shown in Figure 3

Figure 3: Settings Firewall Rues

Make sure that the checkbox for All Azure services and resources to access this server is checked so that azure deployed API and Azure Functions will be able to access this database server.

Step 1: Open Visual Studio 2022 and create a Blank Solution. Name this solution as Azure_FunctionFileUpload. In this solution add a new ASP.NET Core Web API project targetted to .NET 6. Name this project Core_UploadAPI. IN this project add Azure.Storage.Blobs Nuget Package reference to access the Azure Blob Storage. In this project add a new folder and name it Files. This folder will be used to store files uploaded by the end-user to Web API.

Step 2: Since will be accessing the Azure Blob storage, we need to provide the BLOB connection string to the project. Modify the appsettings.json file and define custom keys for Connection String and container name as shown in Listing 2

"ConnectionString": "[YOUR-CONNECTION-STRING-HERE]", "ContainerName": "csvcontainer"

Listing 2: The Connection String and container name

Step 3: In this project add an empty API Controller, name this as CSVFileUploadController, and add the code in it as shown in Listing 3

using Azure.Storage.Blobs;

using Microsoft.AspNetCore.Mvc;

namespace Core_UploadAPI.Controllers

{

[Route("api/[controller]")]

[ApiController]

public class CSVFileUploadController : ControllerBase

{

private readonly IConfiguration _configuration;

public CSVFileUploadController(IConfiguration configuration)

{

_configuration = configuration;

}

[HttpPost("file/upload")]

public async Task<IActionResult> UploadFile([FromForm] IFormFile file)

{

try

{

string fileName = file.FileName;

string connectionString = _configuration.GetValue<string>("ConnectionString");

string contaiernName = _configuration.GetValue<string>("ContainerName");

if (!CheckFileExtension(file))

{

return BadRequest($"The File does not have an extension or it is not image. " +

$"The Expected extension is .csv");

}

if (!CheckFileSize(file))

{

return BadRequest($"The size of file is more than 10 mb, " +

$"please make sure that the file size must be less than 10 mb");

}

// Create a BLOB Container if not exist

BlobContainerClient blobContainerClient = new BlobContainerClient(connectionString, contaiernName);

await blobContainerClient.CreateIfNotExistsAsync();

// Get the reference of the BLOB

BlobClient blobClient = blobContainerClient.GetBlobClient(fileName);

var folder = Path.Combine(Directory.GetCurrentDirectory(), "Files");

string finalPath = Path.Combine(folder,fileName);

using (var fs = new FileStream(finalPath, FileMode.Create))

{

await file.CopyToAsync(fs);

}

// Upload file

await blobClient.UploadAsync(finalPath, true);

return Ok("File Uploaded Successfully");

}

catch (Exception ex)

{

return Ok($"File Uploaded Failed {ex.Message}");

}

}

/// The file extension must be csv

private bool CheckFileExtension(IFormFile file)

{

string[] extensions = new string[] { "csv" };

var fileNameExtension = file.FileName.Split(".")[1];

if (string.IsNullOrEmpty(fileNameExtension) ||

!extensions.Contains(fileNameExtensioUplaofn))

{

return false;

}

return true;

}

/// Check the file size, it must be less than 10 mb

private bool CheckFileSize(IFormFile file)

{

if (file.Length > 1e+7)

{

return false;

}

return true;

}

}

}

Listing 3: The CSVFileUploadController

The code in Listing 3 contains an action method of name UploadFile(). This method accepts an uploaded file from the client application using the IFromFile interface. Furter this method reads uploaded file name using the FileName property of the IFromFile. The action method then reads the BLOB Connection string and the container name from the appsettings.json. Since we are restricting the uploaded file extension to .csv the CheckFileExtension() method is used to check if the uploaded file is CSV or not if the file is not csv file then the error response will be sent back. The action method further uses the CheckFileSize() method to verify if the file size is not more than 10 MB. The action method uses the BlobContainerClient class to connect and authenticate with the Azure Storage Blob container so that they can be manipulated. This class creates the container if not already exist, in our case, the name of the container created will be csvcontainer. The GetBlobClien() method of the BlobContainerClient class is used to create an instance of the BlobClient class. This class is used to manipulate the Blob in the Azure Blob Storage. Now the action method will store the uploaded file on the server and then it will be uploaded to the Azure Blob Storage using the UploadAsync() method of the BlobClient class.

The structure of the CSV File is as follows

BusinessEntityID PersonType NameStyle FirstName LastName EmailPromotion

Let's test the API, I have used Postman to upload the file. In the postman hit the API URL. Make sure that the Content-Type is multipart/form-data and in the Body pass the CSV file as shown in Figure 4

Figure 4: Upload file using Postman

Once the Send button is clicked, the file will be uploaded to the Azure Blob container as shown in Figure 5

Figure 5: File uploaded in Azure Blob Storage

So, far we completed the step for uploading the CSV file in the Azure Blob Storage. But now we need to add an Azure Function, a serverless process to read the file from the Azure Blob storage and add its contents in the PersonInfo table of PersonDb database of Azure SQL. Let's add a new Azure Function project of the name ProcessFunction to the same solution and make sure that the BlobTrigger is selected as shown in Figure 6

Figure 6: The Azure Function with the BlobTrigger

Step 4: Since the Azure Function will use EntityFrameworkCore to connect to Azure SQL for performing write operations we need to add the following NuGet packages to the Azure function project.

Microsoft.EntityFrameworkCore

Microsoft.EntityFrameworkCore.SqlServer

Microsoft.EntityFrameworkCore.Relational

Microsoft.EntityFrameworkCore.Design

Microsoft.EntityFrameworkCore.Tools

Using the dotnet CLI, generate Models into the Azure Function project as shown in the command in Listing 4

dotnet ef dbcontext scaffold "[AZURE SQL Connection String]" Microsoft.EntityFrameworkCore.SqlServer -o Models

Listing 4: The dotnet scaffolding command

Once this command is successful, the Models folder will be added to the Azure Function project with PPersonInfo.cs and PersonDbContext.cs files in it.

Step 5: We need to copy the connection string from the OnConfiguring() method of the PersonDbContext and paste it to the host.json and local.settings.json so that it can be used by the Azure Function project while running on Azure and locally. We need to add the Azure Blob connection string to these files as shown in Listing 5

local.settings.json

"Values": {

"AzureWebJobsStorage": "[AZURE-BLOB-CONNECTION-STRING]",

"FUNCTIONS_WORKER_RUNTIME": "dotnet",

"SqlConnectionString": "[AZURE-SQL-CONNECTION-STRING]"

}

host.json

"Values": {

"AzureWebJobsStorage": "[AZURE-BLOB-CONNECTION-STRING]",

"FUNCTIONS_WORKER_RUNTIME": "dotnet",

"SqlConnectionString": "[AZURE-SQL-CONNECTION-STRING]"

}

Listing 5: host.json and local.settings.json ith keys

Step 6: In the Function project, add the following package to access Azure Blob Storage

Microsoft.Azure.WebJobs.Extensions.Storage

Step 7: Rename the Function1.cs to ProcessBlob.cs and add the code in it as shown in Listing 6 in it

using System;

using System.IO;

using Microsoft.Azure.WebJobs;

using Microsoft.Azure.WebJobs.Host;

using Microsoft.Extensions.Logging;

using Microsoft.Azure.Documents.Client;

using System.Threading.Tasks;

using ProcessFunction.Models;

namespace ProcessFunction

{

public class ProcessBlob

{

[FunctionName("BlobProcessor")]

public async Task Run([BlobTrigger("csvcontainer/{name}", Connection = "AzureWebJobsStorage")]Stream myBlob, string name, ILogger log)

{

log.LogInformation($"C# Blob trigger function Processed blob\n Name:{name} \n Size: {myBlob.Length} Bytes");

log.LogInformation($"CSV File");

if(myBlob.Length > 0)

{

using (var reader = new StreamReader(myBlob))

{

var headers = await reader.ReadLineAsync();

var startLineNumber = 1;

var currentLine = await reader.ReadLineAsync();

while (currentLine != null)

{

currentLine = await reader.ReadLineAsync();

await AddLineToTable(currentLine, log);

startLineNumber++;

}

}

}

}

private static async Task AddLineToTable(string line, ILogger log)

{

if (string.IsNullOrWhiteSpace(line))

{

log.LogInformation("The Current Record is empty");

return;

}

var columns = line.Split(',');

var person = new PersonInfo()

{

BusinessEntityId = Convert.ToInt32(columns[0]),

PersonType = columns[1],

NameStyle = Convert.ToInt32(columns[2]),

FirstName = columns[3],

LastName = columns[4],

EmailPromotion = Convert.ToInt32(columns[5])

};

var context = new PersonDbContext();

await context.PersnInfos.AddAsync(person);

await context.SaveChangesAsync();

}

}

}

Listing 6: The Function to Process Blob

The Function BlobProcessor will be triggered when a new Bob is added to the csvcontainer. The Run() method reads the Blob using SrreamReader class. Since the CSV file has the first line (0th index line) as column headers, we are reading the file from the second line, i.e. 1st index line. The ReadLineAsync() method of the StreamReader class will read data from CSV file line-by-line and pass each line to the AddLineToTable() method. This method will split the line by comma (,) to read the value for each header separately, and then each value will be added to the PersonInfo entity class property. This PersonInfo instance is then added to the PersonDbContext which will be eventually saved into the Azure SQL database.

To test the application, run the API and Azure Function project. When the CSV file is uploaded to Azure Blob Storage, the Function will be triggered which will open the CSV file, read data from the file and insert the data into the PersonInfo table. You can open the Azure SQL and query to the PersonInfo table to see the data from the CSV file inserted into the table.

Code for this article can be downloaded from ths link.

Conclusion: Using the Azure functions the background tasks can be easily handled in cloud-based apps. Typically, in the cases where you want to perform some task without the explicit interaction, the Azure Functions are useful.